A “quantum gravity expert” is presumably

someone well acquainted with the details

of our immense ignorance of the subject.

I suppose I count.

~John Baez

I long ago promised that I would discuss some of my own research. Here’s the first post that makes good on that promise. Today I’ll discuss a theory of quantum gravity.

Why Quantum Gravity?

Without a doubt, the two greatest advances in physics in the last 120 years were the advent of general relativity and quantum mechanics. These two amazing theories have totally changed the way we see the world. Quantum mechanics describes the physics of the very small, while general relativity describes the physics of the very massive.

Usually we don’t encounter very small, very massive things. However, they do exist, and we’d like to understand them. Black holes are the quintessential quantum gravitational mystery. A black hole is so incredibly massive that it pulls all matter within it down to a tiny (perhaps infinitesimally small) point. And this point is small enough that we need quantum mechanics to understand it.

We also need quantum gravity to describe the universe on large scales. Modern cosmology tells us that the universe is expanding, even accelerating. If we extrapolate back to right before the universe began with a bang, it was infinitesimally small. A point. And it was—in a sense—the most massive it is possible for anything to be. We need quantum mechanics to understand this. Indeed, the most successful story we have about the early universe, inflation, relies heavily on quantum mechanics.

The accelerating universe is also a mystery, and scientists hope that quantum gravity will be able to explain it.

Unfortunately, quantum mechanics and general relativity don’t agree. Not at all. Quantum mechanics assumes that quantum particles, described by their wavefunctions, evolve in a static, eternal universe. However, in general relativity, the background itself is a living thing. Space and time reshape themselves according to the stuff contained in the universe. The quantum particles are affected by the changes in shape of the universe and affect the universe in turn, forming a feedback loop. This makes combining quantum mechanics and general relativity extremely hard.

Later, people came up with ideas like string theory, loop quantum gravity, and causal sets, all of which attempt to solve the problem of quantum gravity. But so far, although each theory has its success stories, no one theory has proven itself to be correct… or even predicted anything we can test. The best we can say is that most of them can show they look like general relativity if you take out quantum mechanics.

Needless to say, this problem is hard.

One of the things I work on is a candidate theory of quantum gravity called Causal Dynamical Triangulations, or CDT for short. Here’s how it works.

Adding Up All Universes

I already described one way to handle quantum mechanics, called the Feynman path integral. Classically, a particle takes the path between two points that minimizes (technically extremizes) the energy cost for the particle. In quantum mechanics, the particle is a wave and it takes all possible paths between the two points. Then the probability of the particle traveling from the first point to the second point is given by the sum of a function of the energy costs of all possible paths.

We can take this idea and apply it to quantum gravity. Roughly, a classical universe starts with some three-dimensional shape and ends with some three-dimensional shape. It will evolve from the initial shape to the final shape in a way that minimizes (extremizes) the energy cost of that transition. Since the universe is a single shape of spacetime, we think of this sort of like a soap bubble connecting two wire rings. The wire rings force a shape at the beginning and end of the bubble, but the middle of the bubble can be whatever it wants.

So what’s the quantum analog? In quantum gravity, the probability of the universe evolving from some initial shape to some final shape is given by the sum of some function of the energies of all possible spacetimes that connect the initial and final shapes. This is called a sum over histories, since we’re summing over all possible histories of the universe.
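As a schematic sketch only (the notation is assumed here, not taken from the post): write S[g] for the "energy cost" of a spacetime g, which physicists call the action, and h_i and h_f for the initial and final shapes. The sum over histories is then usually written along these lines, with the probability coming from the squared magnitude of the sum:

```latex
A(h_i \to h_f) \;=\; \sum_{g \,:\, h_i \to h_f} e^{\, i S[g] / \hbar},
\qquad
P(h_i \to h_f) \;\propto\; \left| A(h_i \to h_f) \right|^{2}
```

The "function of the energies" is the complex phase e^{iS/ħ}, which is why histories can cancel each other out as well as add up.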

Unfortunately, this sum over histories is incredibly hard to compute, or even define. Given an initial shape of the universe and a final shape of the universe, there are uncountably many spacetimes that connect the two. How do we sum over all those histories? How do we even find all of those histories? We need some clever tricks to do it.

Adding Up Some Universes

Right now, we don’t know how to find all the spacetimes that should contribute to the sum over histories. As a next best thing, we want to find the spacetimes that contribute the most to the sum. Imagine you take the number 1. You add it to ![](). Then you add that to ![](), and then ![](), ad infinitum. Your sum looks something like this:

![]()

But pretty quickly the number stops changing when you add more terms to your sum.

![]()

but

![]()

If each successive term in the sum shrinks quickly enough, the sum itself soon stops growing by any noticeable amount. If we added new terms ad infinitum, we’d get

![]()

But with only five terms we’re almost there! Although there are infinitely many more terms to add before we get to the final answer, five terms give us a darn good approximate answer.
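To see this kind of convergence concretely, here is a minimal sketch. The particular series is an assumption chosen for illustration (each term is one tenth of the previous one); it is not necessarily the series pictured in the post, and `partial_sums` is a hypothetical helper name.

```python
from fractions import Fraction


def partial_sums(ratio, n_terms):
    """Return the running totals of 1 + ratio + ratio**2 + ... (n_terms terms)."""
    total = Fraction(0)
    term = Fraction(1)
    sums = []
    for _ in range(n_terms):
        total += term
        sums.append(total)
        term *= ratio
    return sums


# Assumed example series: every term is one tenth of the one before it.
ratio = Fraction(1, 10)
sums = partial_sums(ratio, 5)

# Closed form for the infinite geometric sum: 1 / (1 - ratio).
limit = 1 / (1 - ratio)

for n, s in enumerate(sums, start=1):
    # Columns: number of terms, running total, distance still to go.
    print(n, float(s), float(limit - s))
```

After five terms the running total differs from the infinite sum by roughly one part in a hundred thousand, which is the sense in which a handful of dominant terms can stand in for the whole sum.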

We’d like to do the same with quantum spacetime. We can approximate the sum over all histories by taking the sum only over the histories that add the most to the Feynman path integral. So for now, our goal is to find those histories.

Universe Statistics

Right now, it is not at all obvious which histories contribute the most to the sum. To find them, we take advantage of a correspondence between quantum mechanics and statistical mechanics, called the Wick rotation. I’ve discussed before how in relativity, distances in the time direction square to negative numbers. This means we can think of the time direction as imaginary. (See my previous post on imaginary numbers for more info on what that means.)

But what happens if we make time real again? If the spacetime is sufficiently well-behaved (and it doesn’t have to be!), we can rotate the time axis through the complex plane to make it real. This transforms our spacetime into something we’re more used to, where all the distances square to positive numbers. Later, once we’ve evaluated our sum over histories, we can undo the rotation to find the right answer. This is called a Wick rotation.

Why do we Wick rotate? By changing the time axis to a real axis, our quantum system becomes a classical exercise in probabilities. If we had a humongous bag of Wick-rotated spacetimes (also called Euclidean spacetimes), and we stuck our hand in the bag and pulled a universe out, we could use statistics to figure out how likely it is that we’d pull out a given universe. And better yet, the most likely universes are the ones that contribute the most to the sum over histories if we Wick-rotate back.

So all we need to do is make a bag of Wick-rotated universes and pull universes out of the bag at random. In other words, we need to randomly generate Euclidean universes. And we can do that on a computer.
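Schematically, and with notation that is assumed here rather than taken from the post (S[g] is the "energy cost" or action of a spacetime g, S_E[g] is its Wick-rotated counterpart, and τ is the rotated time), the Wick rotation turns the oscillating quantum weight of each history into an ordinary statistical weight:

```latex
t \;\to\; -i\tau
\quad\Longrightarrow\quad
e^{\, i S[g] / \hbar} \;\to\; e^{-S_E[g] / \hbar},
\qquad
P(g) \;=\; \frac{e^{-S_E[g]/\hbar}}{\sum_{g'} e^{-S_E[g']/\hbar}}
```

The second expression is the probability of pulling the universe g out of the bag: universes with a small rotated action are exponentially more likely than those with a large one.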

The Universe On Your Laptop

Spacetime as we know it is continuous. If you take a cube of empty space, and you zoom in on it with your microscope, you could zoom in forever. No matter how close you look, no matter how small the things you’re looking at are, you can always zoom in further and look at smaller stuff. In other words, things can be infinitely small. (We don’t know whether this is actually true. People have proposed a quantum of distance called the Planck length, which might be as small as things get. But we usually treat things as continuous.)

A computer has finite precision, though. It can’t encode things that are infinitely small. Instead we have to stop somewhere. And this means that we have to transform the smooth continuous shape of the universe into something made up of points and lines of fixed, nonzero size.

(To make things easier to understand and visualize, I’m going to drop from four dimensions to three. Now we have two spatial dimensions and one time dimension. This isn’t as crazy as it sounds. A lot of my research has been on three-dimensional quantum gravity. The reason is that the physics is easier, but we can still learn something about the four-dimensional case. Here’s a whole article in Scientific American about why quantum gravity in flatland is a good idea.)

In Causal Dynamical Triangulations, we make a specific choice about how to encode information on the computer. This choice is motivated by making the spacetime “nice” enough to Wick-rotate. For that to be possible, we enforce that there is a well-defined time direction. This sounds obvious, but it’s not always true in general relativity. You can have arbitrarily crazy spacetimes where time loops on itself, or where the time direction depends on where you are in the spacetime. Indeed, in string theory, the basic spacetime, a Calabi-Yau manifold, absolutely does not have a well-defined notion of time.

We also enforce that there are no wormholes or baby universes, which also add ambiguity to the notion of “time.”

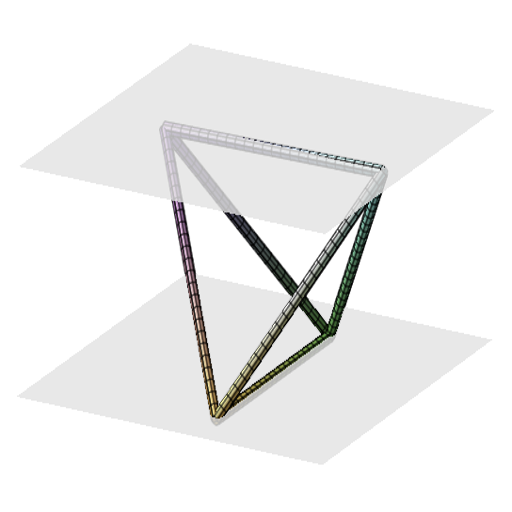

We construct our computerized universe out of equilateral tetrahedra, each of which is a tiny piece of Minkowski space. Each tetrahedron spans two discrete times. The orientation of the tetrahedron determines the effect it has on spacetime. The three possible orientations are shown below. They’re labeled by the number of vertices they have on each time slice. So a (3,1)-tetrahedron has three vertices on the lower time slice and one vertex on the upper time slice. And so on.
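The labeling rule is easy to express in code. The following is a minimal sketch, not taken from the CDT codebase: `tetrahedron_type` is a hypothetical helper, and it assumes each vertex carries an integer time-slice label and that time does not wrap around.

```python
from collections import Counter


def tetrahedron_type(vertex_times):
    """Label a tetrahedron by how many vertices sit on each of its two time slices."""
    if len(vertex_times) != 4:
        raise ValueError("a tetrahedron has exactly four vertices")
    counts = Counter(vertex_times)
    if len(counts) != 2:
        raise ValueError("vertices must lie on exactly two time slices")
    lower, upper = sorted(counts)
    if upper - lower != 1:
        raise ValueError("the two time slices must be adjacent")
    # (vertices on the lower slice, vertices on the upper slice)
    return (counts[lower], counts[upper])


print(tetrahedron_type([0, 0, 0, 1]))  # (3, 1)
print(tetrahedron_type([4, 5, 4, 5]))  # (2, 2)
print(tetrahedron_type([2, 3, 3, 3]))  # (1, 3)
```

The three return values correspond to the three orientations described above; any other split of four vertices across two adjacent slices is impossible.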

We put the tetrahedra together so that face meets face and edge meets edge—there can’t be any gaps. When we put it all together, the spacetime looks something like the image below. The image doesn’t quite capture what’s going on because the tetrahedra are all the same size and you can’t really pack them together in a flat spacetime. So when the edges look like they’re different sizes, they’re not. This is actually curvature of the spacetime the tetrahedra are supposed to make up.

Measuring Curvature

To figure out which spacetimes are most probable, we need to be able to measure how curved they are. This is an integral piece of Einstein’s theory of general relativity. So let’s step back and think about how we can measure curvature. I’ve discussed before how it’s possible to measure curvature by looking at angles. Basically, look at a triangle and measure the failure of the interior angles of the triangle to add up to 180 degrees. We can use a similar idea here. However, we look at the interior angles of all tetrahedra that meet at an edge and measure the failure of the sum of those angles to add up to 360 degrees.

Let’s look at an example in two dimensions. A single tetrahedron approximates a sphere. Now three triangles of the tetrahedron meet at a single vertex, as shown below. In flat space, if we rotated around that single vertex, we’d travel 360 degrees. However, the interior angle of each triangle at that vertex is less than 120 degrees, so together they add up to less than 360 degrees. The shortfall tells us the curvature at that point. This is called Regge calculus.

region on a sphere. (B) A tetrahedron is a very crude approximation of a sphere. (C) Somewhat analogously to the use of a perfect triangle, we can find the curvature at a vertex x on the tetrahedron by finding the deviation from 360 degrees of the full angle of rotation around the vertex. We call this deviation the deficit angle.
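The deficit angle is simple enough to compute by hand, but a short sketch makes the bookkeeping explicit. It assumes a regular tetrahedron, so every face is an equilateral triangle with 60-degree corners; `deficit_angle` is a hypothetical helper name.

```python
def deficit_angle(angles_at_vertex_deg):
    """Return 360 degrees minus the sum of the triangle angles meeting at a vertex."""
    return 360.0 - sum(angles_at_vertex_deg)


# Assumption: a regular tetrahedron, where three equilateral triangles
# (60-degree corners) meet at every vertex.
tetra_vertex = deficit_angle([60.0, 60.0, 60.0])

# For comparison: six equilateral triangles around a vertex tile the flat plane.
flat_vertex = deficit_angle([60.0] * 6)

print(tetra_vertex)      # 180.0
print(flat_vertex)       # 0.0
print(4 * tetra_vertex)  # 720.0, summed over the four vertices
```

A zero deficit angle means the surface is flat at that vertex, and a positive one means it curves like a sphere. The total of 720 degrees over all four vertices is the same total any closed, sphere-like polyhedron gives, which is one way to see that the tetrahedron really is a (very crude) sphere.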

A Universe Factory

Now we know how to put a universe on our computer. But we still haven’t got a likely universe. The way we get one of those is to take a universe, any universe at all, put it on our computer, and then make random changes to it. Each time we make a change, we measure whether the new, modified universe is more or less likely than the previous universe. If the new one is more likely, we keep the change. Otherwise, we reject it a fraction of the time and keep it a fraction of the time, based on how much less likely it is than the previous universe. This generates a single probable spacetime.

A typical simulation of a single Wick-rotated quantum universe is shown below. The long direction is the time axis and the other two directions show the size of the universe at a given discrete time. The movie is showing the universe evolve from an arbitrary initial configuration to a likely final configuration. About halfway through the simulation, we get to a probable configuration. After that, the changes are just quantum fluctuations around the mean.

This type of simulation is called a Monte Carlo simulation. The set of decisions the program uses to make the simulation go is called the Metropolis-Hastings algorithm.
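The accept-or-reject rule can be sketched in a few lines. This is a toy illustration under loud assumptions, not the CDT code: the "universe" is just a single integer size n, the `action` function is a made-up energy cost that favors n = 10, and every proposed change is a step of plus or minus one.

```python
import math
import random


def action(n):
    """Hypothetical toy 'energy cost' of a universe of size n (not the CDT action)."""
    return (n - 10) ** 2 / 8.0


def metropolis_step(n, rng):
    """Propose a small random change, then keep or reject it."""
    proposal = n + rng.choice((-1, 1))  # symmetric proposal: grow or shrink by one
    delta = action(proposal) - action(n)
    # More likely universe (delta <= 0): always keep the change.
    # Less likely universe: keep it only with probability exp(-delta).
    if delta <= 0 or rng.random() < math.exp(-delta):
        return proposal
    return n


def run_chain(start, n_steps, burn_in, seed=0):
    """Run the chain and return the sizes visited after the burn-in period."""
    rng = random.Random(seed)
    n = start
    samples = []
    for step in range(n_steps):
        n = metropolis_step(n, rng)
        if step >= burn_in:
            samples.append(n)
    return samples


# Start far from the likely region, as in the movie described above.
samples = run_chain(start=50, n_steps=20000, burn_in=2000)
print(sum(samples) / len(samples))  # average size after the chain has settled
```

The burn-in plays the role of the first half of the movie: the chain starts from an arbitrary configuration, drifts toward the likely ones, and only then do the samples reflect fluctuations around the mean.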

The Average Universe

Unfortunately, it’s not enough to generate a single likely universe. To perform a sum over histories, we have to average over lots of them. If we do this, we can generate the average Wick-rotated, quantum universe. (We don’t know how to Wick-rotate back, so for now we have to do everything with real time.)

The expected quantum universe in the ground (or lowest energy) state is shown below. (Lowest energy means that the universe is empty and that it evolves from a big bang to a big crunch: nothing to nothing.) I’ve plotted spatial area of the universe as a function of discrete Euclidean time. Don’t worry about the details. I just wanted to show you that the plot is smooth after you average it out, even though each individual universe is pretty bumpy. The error bars show the quantum fluctuations. If we Wick-rotated the universe we lived in now, it would look a lot like this plot… which tells us that causal dynamical triangulations reduces to general relativity when we take away quantum mechanics.

Quantum Hints

So on large scales the universe of causal dynamical triangulations looks like Einstein’s universe. What about on small scales? Something very weird happens if you zoom in close enough… the universe begins to look like a spider web. In the past I’ve talked about the idea of fractional dimension and a way to measure it, called spectral dimension. We can measure the dimension of the universe of causal dynamical triangulations, and we see that it’s not what we expect.

The scale dependence of the dimension is plotted below. On large scales, the dimension is four, like we expect. But as we move to small scales, the dimension drops dramatically… all the way down to 2.8! We don’t really know what’s going on here, but it’s a hint of truly quantum behavior. We expect there to be a “quantum foam” and this might be what we’re seeing.
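For readers who want a feel for how such a measurement works, here is a minimal sketch on a toy model. It relies on the usual definition of spectral dimension via a random walk: if P is the probability that a walker is back where it started after a given number of steps, the spectral dimension is minus two times the slope of log P against log steps. The flat grid below is an assumption for illustration and has nothing to do with an actual CDT spacetime; both function names are hypothetical.

```python
import math


def return_probability(steps, dimension):
    """Chance that a random walker is back at its start after `steps` steps.

    Toy model (an assumption, not CDT): a flat grid on which the walker moves
    +1 or -1 along every axis at each step, so the axes are independent.
    """
    if steps % 2:
        return 0.0  # an odd number of steps can never return to the start
    one_axis = math.comb(steps, steps // 2) / 2 ** steps
    return one_axis ** dimension


def spectral_dimension(dimension, steps):
    """Estimate -2 * dlog(P)/dlog(steps) between `steps` and 2 * `steps` (steps even)."""
    p1 = return_probability(steps, dimension)
    p2 = return_probability(2 * steps, dimension)
    slope = (math.log(p2) - math.log(p1)) / math.log(2.0)
    return -2.0 * slope


for d in (1, 2, 3, 4):
    print(d, spectral_dimension(d, 200))
```

On this flat grid the estimate comes out close to the ordinary dimension at every scale. The surprise in CDT is that the same kind of measurement gives a smaller number when the walk is short, that is, when it only probes small scales.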

The State of the Art

Now you know the basics of Causal Dynamical Triangulations. Understanding the theory is an ongoing effort by fewer than fifty people around the world. So far, we can only simulate empty universes. Some people are working on putting matter into the model. I’m working on studying the probabilities of the universe evolving between different initial and final shapes. Others are working on testing how strict we have to be with the Wick rotation. It’s an ongoing story, so I hope you’ll keep your eyes peeled!

Further Reading

There isn’t much on causal dynamical triangulations. So here’s some further reading on that and on quantum gravity in general.

- The inventors of causal dynamical triangulations wrote an article for Scientific American. The article is here, but it’s behind a paywall. You can find it for free here.

- For a more technical introduction to causal dynamical triangulations, I recommend CDT founder Renate Loll’s article, “The Emergence of Spacetime, or Quantum Gravity on Your Desktop.”

- For a perspective on loop quantum gravity, check out the community’s website.

- The theoretical physicist Sean Carroll often talks about quantum gravity and physics in general in a very accessible manner.

- Lee Smolin is a popular-science author on quantum gravity and physics in general. You might want to look at his website.

- I could hardly leave you without pointing you to my mentor in all things quantum gravity, Steve Carlip.

- And here’s a whole blog on quantum gravity!

Play With it Yourself

If you’re especially excited about quantum gravity, and especially brave, you might want to try and run a simulation yourself. We are planning on open sourcing the code in the near future. So, for reference, here’s a link to a (currently locked) github repository.

Here’s the code: https://github.com/ucdavis/CDT

And the documentation I’ve written is here:

https://github.com/ucdavis/CDT/tree/master/documentation

and here:

https://github.com/ucdavis/CDT/tree/master/2p1-fixed-boundaries/documentation

If you use the code, please cite it as ours. The original author is Rajesh Kommu. However other authors include myself, Steve Carlip, Joshua Cooperman, Christian Anderson, David Kamensky, Kyle Lee, and Adam Getchell.

Alas, the majority of the code is in Lisp. You might like that, but most likely, you resent it. Sorry about that.

EDIT: Unfortunately, we have had trouble open-sourcing our code through the bureaucracy of the University. We still plan to release our code, but it is unavailable at the moment. I’m sorry about that.

Questions? Comments? Insults?

I’m afraid that this post might have been less clear than previous posts. It’s certainly longer! So if you have any questions, please let me know so I can clear up the confusion!

I like your post. I also work with CDT models, but from another perspective. Something that I didn’t know is that dimensions shrink. It seems to help me with my model. If you have more links on this subject, can you provide them?

Thanks for reading Ovidu! Sorry for taking so long to reply. Yes, my former advisor Steve Carlip wrote an article on all forms of spontaneous dimensional reduction in quantum gravity. This is probably the place to start. Here’s the link to ArXiv: http://arxiv.org/pdf/0909.3329v1.pdf

What model are you working on?

Thank you Jonah, for the link. I read the paper and found it interesting. Still wonder myself how could I miss dimensional reduction until now. 🙂

I work on a model that uses only triangles (I mean, not tetrahedra) for all dimensions. Hence my interest in dimensional reduction.

I see. Very cool! I’m glad I could help a little!

Jonah, thanks for making the concept of CDT accessible for people like me (non-physicist, but university degree in engineering, not a big fan of complicated mathematics, but very much interested in the deeper understanding of our universe). I’ve read the Feynman book on QED (read it like 4 times front to back), and I understand how he sums over all possible paths, but I don’t see in CDT if there are any rules for the creation and destruction of the 4-simplices or tetrahedra. I only see rules for gluing them together. How do you control the number of 4-simplices in each timestep?

Probably I will read your post again a couple of times, maybe then I will understand CDT better. Thanks for people like Lee Smolin, Renate Loll and you for daring to pursue your own mind instead of doing physics mainstream, I get a lot more satisfaction reading about CDT than I do reading about the Standard Model or String theory.

Kind regards,

Bart (Belgium)

Thanks for reading, Bart! This is a really good question, and I completely glossed over this detail. We don’t completely fix the number of simplices. Indeed we can’t. The way we make small random changes to a spacetime is by adding or removing simplices in a way consistent with the rules for gluing them together. Instead we arrange our algorithm that adds or removes simplices such that the probability of a simplex being added is the same as the probability of a simplex being removed so that, on average, the total spacetime volume remains constant. Does that help?

Jonah, I asked that question because I thought the cosmological constant would somehow play a role in defining the number of simplices. I assumed that in an accelerating universe like ours, the number of simplices would continue to grow thereby expanding empty space. Is this a wrong way of thinking about CDT?

Bart, that’s great insight! Yes, the cosmological constant plays a role, although it’s pretty complicated.

The cosmological constant controls how the spacetime grows in physical time. However, computer time, i.e., each iteration of the algorithm, is different. Each spacetime generated by the algorithm is made of both space and time and, yes, in general, it behaves like an accelerating universe should. The computer time steps make small random changes to a complete universe that exists for an amount of physical time. The computer keeps track of the entire history of the universe at each algorithm step.

However, there’s another piece to the puzzle, too. The cosmological constant does partly control the total number of simplices in the spacetime through the “action.” The action is, roughly, the energy cost of a given spacetime in the Feynman path integral. This means that the action controls the probability of a given spacetime emerging in the simulation, relative to other spacetimes. The cosmological constant term makes large spacetimes more probable. On the other hand, the action also includes a curvature term, which comes from attractive gravity feeding on itself, which makes small, crumpled up universes more likely. To keep the number of simplices fixed, we carefully balance these two terms, making them bigger or smaller relative to each other so that the probability of the number of simplices increasing is equal to the probability of the number of simplices decreasing.

You might wonder if this controls the cosmological constant to the point of being unphysical. However, it’s more complicated than that. The numbers we need to choose to balance the probability of growth against the probability of shrinkage depend on the number of simplices and the amount of discrete time we allow the spacetime to occupy.

The way to think about it is this way: the simplices are a numerical artifact to allow us to study quantum gravity. In the physical world, the number of time slices and the number of simplices would be infinite. So when we increase the number of time slices and the number of simplices to very large numbers (in a specific way to keep their ratio fixed), the cosmological constant that we need to set to keep the number of simplices at a fixed number should approach its physical, measured value.

Sorry, this is all pretty technical, so if you have any questions, please let me know. Hope this helps!

Thanks for that answer, Jonah. It indeed is much more complicated than I would have imagined. I do want to ask about the validity of simulating an empty universe: I once read that the total amount of energy in our universe should equal zero (the lower the energy, the longer in time a quantum fluctuation may exist). Vacuum energy represents the negative energy, and causes space to extend according to the ‘action’ you described, while matter and radiation represent positive energy. Is it not possible that the ‘curvature’ term would not be necessary when you introduce matter in the equations? I personally imagine empty space as always expanding tetrahedra, and matter and radiation needing to constantly ‘consume’ these tetrahedra in order to survive, thereby creating a balance between eternal expansion through vacuum energy and contraction due to matter and positive energy. For me, this would explain where gravity comes from: matter ‘consuming’ the empty space between them. I realize this might be a very non-conventional way of looking at things, but I go ahead and ask the question anyway, hope you don’t mind. Best regards,

Bart

Bart, I am so sorry I missed this! You must think I was ignoring you! I’m not sure if you’re still checking my blog… but somehow I just missed that you posted this comment.

In answer to your question, it’s valuable to simulate an empty universe because we can compare the result to what we would get without general relativity–only with quantum mechanics.

We would need the curvature term in the action even with matter, since general relativity tells us that mass is equal to curvature. However, it may very well be that if we included matter it would behave just right so that we no longer need a cosmological constant (dark energy) term. This would be extremely nice!

As for your unconventional picture of gravity, I’m sorry I can’t really comment. It’s very hard to evaluate conceptual ideas without some math behind them because the theory needs to hold predictive power. All I can say is that Einstein’s general relativity works extremely well to explain gravity, so I’m trying to build off of that.

It seems that one of the most pressing reasons to do quantum gravity is to tell us what happens inside black holes and at the big bang. What impresses me about LQG is that they appear to have clear answers to these questions, i.e. a bounce. Whether this is the correct answer of course remains to be seen.

Does CDT give us any kind of answer to these questions yet?

Thanks for the reply, BBB.

No, CDT can’t answer these questions yet. One issue is that the number of people working on CDT is quite small. Another reason is that, although CDT simulations are easier to carry out than LQG calculations, it is difficult to interpret a simulation and to design it in such a way as to test these questions.

I’d argue that, given the number of people studying CDT, it has made much more rapid progress than LQG or String Theory. However, it’s one theory. It’s new, and in quantum gravity, progress is slow.

Thanks for the reply, Jonah. I have another question: you say that wormholes and baby universes are not allowed in CDT. Is that an assumption in order to allow you to do calculations, or is it fundamentally disallowed by the theory?

I’m thinking of the recent suggestion that entanglement and wormholes are connected. Does CDT rule this out? What about eternal inflation, which is claimed to make an infinite number of baby universes? Is that ruled out in CDT too?

That’s a good question, BBB. I’d say the answer isn’t totally clear. You see, people have tried this “sum over histories” approach before CDT. The previous iteration was just called “Dynamical Triangulations” although we now call it “Euclidean Dynamical Triangulations” to make the distinction clearer.

In Euclidean Dynamical Triangulations, we did not forbid wormholes or baby universes. And we also treated “time” differently in a way that made the “Wick rotation” ill-defined. The results were disastrous. Euclidean Dynamical Triangulations was unable to predict a nicely behaved de Sitter universe like CDT can. Instead, all the simulations could generate were infinitely “crumpled up” universes or universes that were flattened, like a pancake.

CDT is sort of the “fixed” version of Euclidean Dynamical Triangulations, and we’ve added a lot of restrictions. It’s not totally clear how many of these restrictions can be relaxed. For instance, Renate Loll and collaborators demonstrated that a fixed “time direction” is not completely necessary:

http://arxiv.org/abs/1307.5469

So the restrictions we’ve imposed like no wormholes and no baby universes certainly make computation easier… But it’s not clear what combination of the restrictions we impose make computation POSSIBLE. It’s also not clear if “making the computation possible” means nature prefers these restrictions. It’s something we still need to explore.

As for inflation, that’s a different sort of “baby universe.” In that case, every piece of space is still “connected” as one fabric. It’s just that some pieces of the universe are growing extremely rapidly, and some pieces are growing more slowly. CDT doesn’t rule that out.

I hope this helps!

Yes thanks very much

Jonah, I think your posts are quite well composed and useful for those less deeply involved in the topic (like me). I fiddled around with CDT a couple of years back during my MSc thesis, and I put together a simple 3D (Euclidean) CDT simulator for GPUs. My interest was mainly from the computational side (as I graduated as an IT-Physicist -> a strange breed whose physics skills are somewhat reduced in favor of good programming skills). Now my field is completely different (fluid dynamics), but I bumped into your blog recently and wanted to take a look at that Lisp code, but it doesn’t seem to be available. Would it be possible to put it back on GitHub, or could you send me a copy of the code? (I can still browse it on http://code.ohloh.net/, but a downloadable repository would be better.)

Thanks,

Zega

Thanks! I’m glad you enjoyed the article. Sorry, we made the github repository private for a while so that we can sort out licensing issues.

If you promise not to distribute it until we open source it again, I can send you the codebase as a tarball.

That would be very nice of you! I won’t distribute it, you can be sure of that. You can reach me at: zavodszky – AT – hds.bme.hu

Okay. I’ve sent it. Let me know if you have any trouble!

Thanks! (Naturally if I happen to make any useful modification I will contribute it back.)

Thanks, that’d be great!

Very nice post Jonah!

Thanks, Adam! How are you?

Hey folks,

I’ve recently been on a particle physics/cosmology binge and am very interested in the CDT model as it incorporates concepts of fractal geometry which seem to be missing in the more mainstream cosmological models and formulations of quantum gravity. As my background is in biology, I’d like to point out an article published in Science (1999) entitled The Fourth Dimension of Life: Fractal Geometry and Allometric Scaling of Organisms. The article basically deals with scaling relationships of biological systems across vast orders of magnitude. There’s a common theme in biology of maximizing surface area to volume relationships, which in turn maximizes nutrient/waste exchange across systems. Nature apparently likes to do this with the use of fractal branching networks (or virtual fractal branching networks in cases of unicellular organisms) and the article reaches the conclusion that living systems behave like 4D systems in a 3D universe (neglecting the temporal dimension). Natural selection seems to have used fractal branching networks to its advantage by expanding the surface area component of dimensionality, sort of like how a crumpled sheet of paper can be used to approximate a 3D sphere.

I’m curious if you think there’s a link between this and your research in physics. The universe seems to be fractal-ish across very large scales and these patterns appear naturally almost everywhere. While there’s no selection process to these patterns outside of biological systems, it seems like the universe has a tendency to approximate higher dimensions. I’ve heard of extra dimensional theories in physics as ways to explain the weakness of gravity compared to other fundamental forces but I wonder if these putative dimensions are simply illusory or an emergent property of a universe with fractal tendencies. It’s rather surprising that a lot of physicists tend to regard fractals as coincidences of nature and the subject isn’t studied as well as it perhaps could be. Is it possible that gravity is fundamentally different from the other forces because it is amplified via fractal geometry at large scales (not sure if I phrased that properly)?

Thanks for the insightful comment, Tian Wang! And for the pointer to the Science article!

(For those of you who have journal subscriptions, here’s the link:

http://www.sciencemag.org/content/284/5420/1677.short

It’s definitely worth a read.)

In the case of CDT, the fractal geometry actually behaves inversely to how it works in biology. Spacetime is made up of discrete chunks, each of which is 4-dimensional. On large scales, there are enough of these chunks that the universe looks like a smooth 4-dimensional shape. But if we go to small enough scales, we find that these chunks are arranged in such a chaotic, fractal-like fashion that the dimension actually DROPS down to 2 or 3.

What’s happening on these small scales is not exactly clear. My mentor, Professor Carlip, suggests that dimension drops because of the CAUSAL structure of these little discrete chunks. Matter can only travel forward in time, not backwards, so if the path of “forward” is very narrow and perhaps very wild and curvy, then the number of places one can go is restricted.

(For a bit more, see my article on fractional dimension here: http://www.thephysicsmill.com/2013/01/20/you-cant-get-there-from-here-dimension-and-fractional-dimension/)

There is one approach to quantum gravity, called Causal Set Theory, where fractal dimension works the way described in the Science article. In Causal Set Theory, the universe is fundamentally a discrete network of single points, called “events.” The only thing relating two events to each other is “causality.” Given two events, we know that either one is before the other, or they are “causally disconnected” meaning one can’t cause the other. From the description of these causal relationships, an entire smooth, four-dimensional spacetime emerges on large scales. There aren’t really any lay descriptions of this, but I can point you to a review article or two:

http://arxiv.org/pdf/gr-qc/0309009.pdf

http://arxiv.org/pdf/gr-qc/0601121.pdf?origin=publication_detail
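
As a rough illustration of what "events related only by causality" means, here is a small Python sketch. It is a toy built on assumptions of my own: it scatters random events in a flat 1+1-dimensional spacetime (units where the speed of light is 1) and reads off which events can influence which. Real Causal Set Theory goes the other way, starting from the relations and recovering the spacetime. The function names `sprinkle` and `causal_matrix` are hypothetical, chosen just for this example.

```python
import numpy as np

def sprinkle(n, seed=0):
    """Scatter n random events (t, x) in the unit square of flat 1+1 spacetime."""
    rng = np.random.default_rng(seed)
    return rng.uniform(0.0, 1.0, size=(n, 2))

def causal_matrix(events):
    """C[i, j] is True when event i can influence event j.

    Assumption: flat spacetime with c = 1, so i precedes j exactly when
    the time separation exceeds the spatial separation.
    """
    t, x = events[:, 0], events[:, 1]
    dt = t[None, :] - t[:, None]          # dt[i, j] = t_j - t_i
    dx = np.abs(x[None, :] - x[:, None])  # spatial separation
    return dt > dx

events = sprinkle(50)
C = causal_matrix(events)
related = C | C.T
n_pairs = len(events) * (len(events) - 1) // 2
print("causally related pairs:", int(np.triu(related, k=1).sum()), "of", n_pairs)
```

Every pair of events either has one before the other or is causally disconnected, and the "before" relation is a partial order: nothing precedes itself, and if A precedes B and B precedes C, then A precedes C.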

As for other approaches to quantum gravity like string theory or Kaluza-Klein theory, I honestly can’t say if there’s a connection to fractal geometry. I’m afraid I just don’t know enough about these approaches. That said, I think it’s an interesting idea! And I do agree that perhaps physicists need to look more closely at fractals.

Oh, for reference, here’s Professor Carlip’s article on why the dimension might drop on small scales in quantum gravity:

http://arxiv.org/abs/0909.3329

Thank you for the article, Jonah, which introduced the concepts behind this theory to me. I just have a question concerning it: how does the uncertainty principle factor into this? Would it apply to each of the simplices as if they were a quantum particle? What effect would this have on the spacetimes generated by the theory? Perhaps I misunderstood something in the article.

Thanks.

Thanks for reading, James! The uncertainty principle is built in. Let me explain.

In general relativity, the analog of position is the 3-metric, which describes the shape of space at a given time. The analog of momentum is something called the “canonical momentum,” which is how the 3-metric changes in time.

These two quantities obey an uncertainty relation just like the Heisenberg uncertainty principle for particles.

It’s difficult to see how that appears in CDT until you remember that we can’t measure these quantities for a SINGLE spacetime in our ensemble of spacetimes. We can only measure statistics on many spacetimes. And the statistics work out so that any certainty we have in the 3-metric is balanced out by uncertainty in the canonical momentum.
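
For reference, the relation between the 3-metric and its canonical momentum can be written out schematically. This is the standard textbook form from the canonical (ADM) formulation of general relativity, not something specific to CDT. Here $q_{ij}$ is the 3-metric, $\pi^{kl}$ is its canonical momentum, and the ordinary particle relation is shown alongside for comparison:

```latex
\left[\hat{q}_{ij}(x),\, \hat{\pi}^{kl}(y)\right]
  = i\hbar\, \delta^{k}_{(i}\,\delta^{l}_{j)}\, \delta^{3}(x - y),
\qquad \text{compare} \qquad
\left[\hat{x},\, \hat{p}\right] = i\hbar
\;\Rightarrow\;
\Delta x\, \Delta p \ge \frac{\hbar}{2}.
```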

Does this help?

Yes, thank you Jonah, this helps a lot, and is very eye opening and interesting.

Thank you, Jonah, for such a wonderful article explained in a very simple format.

I am a CAD/CAM engineering student and highly interested in quantum physics and astrophysics. What are other fields to explore as a hybrid of CAD/CAM and physics?

Hi Sandeep, thanks for reading!

In general, computers are increasingly important for physics. The techniques I describe here are also used to make predictions about the properties of matter both at the molecular and subatomic level. If you’re interested in that kind of thing, take a look at Monte Carlo methods as applied to physics:

https://en.wikipedia.org/wiki/Monte_Carlo_method_in_statistical_physics
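
To give a flavor of what such a method looks like, here is a minimal Python sketch of the Metropolis algorithm. It is not the CDT code. It samples a single number x with weight exp(-S(x)), where the "action" S(x) = x²/2 is a stand-in I picked because the exact answer (mean 0, variance 1) is known. The function name `metropolis` and its parameters are assumptions made for this example.

```python
import math
import random

def metropolis(action, x0, n_steps, step=2.5, seed=0):
    """Draw samples with probability proportional to exp(-action(x)).

    Assumption: a symmetric uniform proposal of half-width `step`,
    which is the simplest choice, not a tuned one.
    """
    rng = random.Random(seed)
    x, s = x0, action(x0)
    samples = []
    for _ in range(n_steps):
        x_new = x + rng.uniform(-step, step)
        s_new = action(x_new)
        # Accept with probability min(1, exp(-(S_new - S_old))).
        if s_new <= s or rng.random() < math.exp(s - s_new):
            x, s = x_new, s_new
        samples.append(x)
    return samples

samples = metropolis(lambda x: 0.5 * x * x, x0=0.0, n_steps=100_000)[1_000:]
mean = sum(samples) / len(samples)
var = sum((v - mean) ** 2 for v in samples) / len(samples)
print(f"mean = {mean:.3f}, variance = {var:.3f}")
```

In a real application, x would be replaced by a whole configuration (a spin lattice, a molecule, or a triangulated spacetime) and the proposal by a small local change to it, but the accept/reject step stays the same.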

People also do more deterministic computer simulations, such as the flow of gas around a star or in a supernova, or the motion of two black holes emitting gravitational waves. E.g., numerical relativity:

https://en.wikipedia.org/wiki/Numerical_relativity

Does this help?

Great post! Thank you for all the interesting details. I realise it is more than 2 years old: are there any new developments since then?

Another question: I don’t understand the part about dimensions… I thought the dimensions were fixed from the start to 2+1 (after all that’s how the tetrahedrons are defined) so what does it mean that it ends up having fewer dimensions on small scales? And how can a structure have different dimensions on different scales? Isn’t part of a 3D space always a 3D space? All this is very puzzling to me.

Thanks! Yes, CDT has been chugging along. There is, for example, evidence that the restrictions on what kind of spacetimes you can construct don’t need to be quite as extreme:

http://www.sciencedirect.com/science/article/pii/S0370269313004711

That’s the most exciting development in my opinion. But lots of work is being done and lots is being accomplished.

Regarding your questions about dimension: yes, the dimensions are fixed to 2+1. The dimension we measure is called the “spectral” dimension (the Hausdorff dimension is a related but distinct measure). The spectral dimension measures how easy it is to move through the spacetime. Without obstruction, it’s easier to move through a three-dimensional space than a two-dimensional space. But if things are obstructed it can be as if you’re in a lower-dimensional space. That’s what spectral dimension quantifies. I wrote about it here:

http://www.thephysicsmill.com/2013/01/20/you-cant-get-there-from-here-dimension-and-fractional-dimension/
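
To make the diffusion picture concrete, here is a minimal Python sketch (again, not the CDT code). It assumes a plain periodic lattice rather than a triangulated spacetime, and the helper names `return_probability` and `spectral_dimension` are made up for illustration. The idea is that a random walker's chance of being back where it started after σ steps falls off like σ^(-d/2), and the d you read off from that falloff is the spectral dimension.

```python
import numpy as np

def return_probability(shape, steps):
    """Exact probability that a lazy random walker is back at its start.

    Assumption: a periodic lattice of the given shape. The walker stays
    put with probability 1/2 (this avoids even/odd-step artifacts) and
    otherwise hops to a uniformly chosen nearest neighbour.
    """
    ndim = len(shape)
    origin = (0,) * ndim
    p = np.zeros(shape)
    p[origin] = 1.0
    out = []
    for _ in range(steps):
        hop = sum(np.roll(p, s, axis=a)
                  for a in range(ndim) for s in (1, -1)) / (2 * ndim)
        p = 0.5 * p + 0.5 * hop
        out.append(p[origin])
    return np.array(out)

def spectral_dimension(ret, lo, hi):
    """Fit log P(sigma) against log sigma; d_s = -2 * slope."""
    sigma = np.arange(1, len(ret) + 1)
    slope = np.polyfit(np.log(sigma[lo:hi]), np.log(ret[lo:hi]), 1)[0]
    return -2.0 * slope

for shape in [(401,), (61, 61)]:
    d_s = spectral_dimension(return_probability(shape, 200), 50, 200)
    print(shape, "spectral dimension ~", round(float(d_s), 2))
```

On an unobstructed lattice like this one, the measurement simply recovers the ordinary dimension. The interesting result in CDT is that the same kind of measurement gives a smaller number at short scales.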

Thank you. I had heard about fractal dimensions during my curriculum but it looked somehow like a mathematical trick to me. The idea of obstruction and diffusion makes it more intuitive… Yet I still wonder if the talk of dimension is a mere analogy or if they’re “really” dimensions, i.e. degrees of freedom (for example, would it be conceivable, at least in principle, that we live in an “obstructed” high dimension space that only appears to have 3 dimensions, or is it a concept of a different nature than “our” ordinary dimensions?) Popularisation articles on quantum foam often don’t make that distinction…

I hope my question isn’t too philosophical… 🙂

Right. So let me see if I can offer an analogy. Think about a diatomic molecule. In three dimensions, it has seven degrees of freedom in the thermodynamic sense: translation in three directions, rotation around the two axes perpendicular to the bond, and stretching/shrinking of the atomic bond (which counts twice, once for kinetic and once for potential energy). However, at low enough temperatures, the rotational degrees of freedom get “frozen out” because there’s not enough energy to access them.

You can think of spectral dimension SORT OF the same way. The true dimension, which we call the topological dimension, is fixed by the simulation. But at short length scales, the spacetime constructs itself in such a way that we cannot access all degrees of freedom.

You ask about whether our universe only appears three-dimensional and might actually have a higher topological dimension. And indeed, that’s the postulate of Kaluza-Klein theory (an idea that string theory builds on). The idea there is that our universe may have more dimensions, but we cannot access them because they are so small and curled up. In other words: because we do not have enough energy. I wrote about that here:

http://www.thephysicsmill.com/2013/04/28/stuff-from-shape-kaluza-klein-theory/

Yes I’ve heard about Kaluza Klein, my question was if this fractal dimension thing could have the same result even though all the dimensions are extended… Now with your answer I suppose it is not really different (dimensions are “obstructed” in any case)?

Anyway thank you very much for your answers and your posts!

Well they go in opposite directions. Kaluza-Klein theory gives us access to extra dimensions as energy scales rise. In contrast, quantum foam takes them away. Anything exotic must happen at high energies, because dimensional changes that happen at low energies are experimentally ruled out.

And you’re most welcome. 🙂

That’s interesting thanks 🙂

Thank you for this article. It’s a really useful one.


Hi,

I have a question, but it is probably very naive. Also, I am asking years after you first posted.

Since CDT tries to have a foliation of spacetime into spacelike hypersurfaces and time, how would you have a (general) definition of a black hole?

I mean, a general definition of a black hole can’t be made, as far as I understand, using any hypersurfaces, as its boundary is a global property of the spacetime.

Also, I enjoyed reading your article and learning about the ideas of CDT.